In global enterprises, the Azure Virtual WAN (VWAN) enterprise fabric is no longer a purely network-engineering effort. The DevOps engineer has become the connective tissue that translates intent into approved designs, reproducible infrastructure, and validated operations. This article dives deep into why their contributions inside design sessions are vital, and how a single DevOps leader can anchor decision velocity when Microsoft Azure networking is the only platform in scope.

What Makes an Azure VWAN Enterprise Fabric?

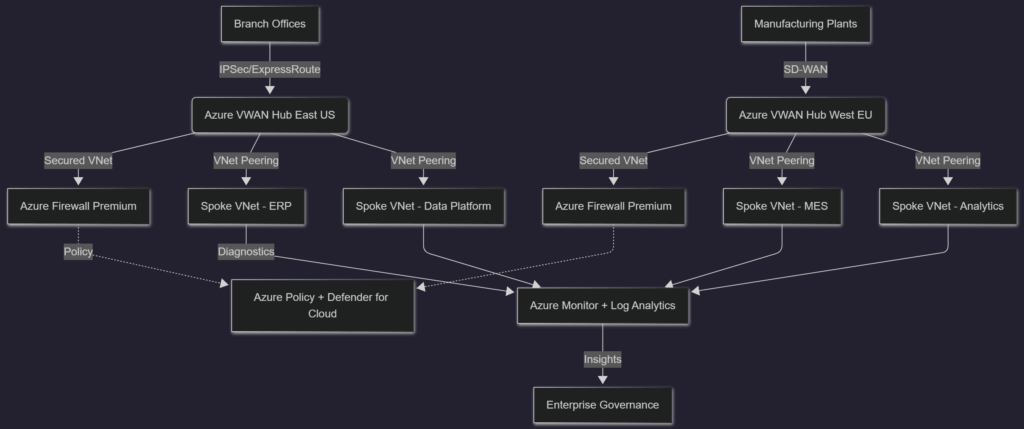

Azure Virtual WAN is Microsoft’s cloud-native backbone for unifying hubs, spokes, security services, and hybrid connectivity. An enterprise fabric layers governance, segmentation, and observability on top, using services such as Azure Firewall, Route Tables, Network Virtual Appliances (NVAs), ExpressRoute gateways, Azure Private DNS, and Azure Monitor. The blueprint is opinionated: it defines how traffic flows, which policies enforce segmentation, where packet inspection happens, and how resiliency is maintained across Azure regions.

Every design decision inside this fabric ripples into automation, telemetry, and release management. That is why the DevOps engineer sits next to the lead network architect when the IP schemas, security boundaries, routing intent, and DDoS baselines are approved.

The diagram highlights how Azure VWAN hubs anchor branch connectivity, while secured hubs enforce centralized inspection with Azure Firewall Premium. Spoke virtual networks host applications, and observability funnels into Azure Monitor and Microsoft Defender for Cloud. The DevOps engineer supplies the Infrastructure-as-Code (IaC) definitions that bind all of those components together, ensuring the fabric can be reproduced in lower environments and validated through automated policy checks before it reaches production.

The DevOps Engineer Inside the Design Room

When an enterprise convenes an Azure networking design session, the agenda typically spans IP allocation, segmentation strategy, traffic inspection points, high availability, routing intent, and operational guardrails. Without a DevOps voice, the session risks delivering diagrams that cannot be codified or tested. The DevOps engineer keeps the conversation anchored around automation contracts:

- Pre-session preparation: Curates Terraform/Bicep modules, Azure Policy definitions, and Azure DevOps/GitHub Actions pipelines that will later enforce the design.

- Live design collaboration: Challenges ambiguous requirements by translating them into IaC parameters, route intent tables, and CI/CD quality gates.

- Post-session validation: Builds deployment rings (sandbox, pilot, production) and integrates Azure Monitor, Azure Load Testing, and Chaos Studio experiments to prove resiliency claims.

Because the DevOps engineer owns the delivery pipeline, they are a vital pillar of decision-making: no diagram leaves the room until the team has confirmed that it can be described in code, secured by Azure role-based access control (RBAC), and monitored through Azure-native observability.
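To make the deployment-ring idea concrete, here is a minimal Python sketch of a promotion gate. It is an illustration only: the `RingResult` fields and the `next_ring` helper are hypothetical names, and the three checks simply stand in for whatever plan, policy, and resiliency evidence your pipeline actually collects.

```python
from dataclasses import dataclass
from typing import List, Optional

# Ring order as described in the article: sandbox, then pilot, then production.
RINGS = ["sandbox", "pilot", "production"]


@dataclass
class RingResult:
    ring: str
    plan_ok: bool        # assumption: IaC plan/validate succeeded
    policy_ok: bool      # assumption: policy compliance checks passed
    resiliency_ok: bool  # assumption: load/chaos experiments met their targets


def next_ring(results: List[RingResult]) -> Optional[str]:
    """Return the ring that may be deployed next, or None when all rings passed."""
    passed = {
        r.ring for r in results
        if r.plan_ok and r.policy_ok and r.resiliency_ok
    }
    for ring in RINGS:
        if ring not in passed:
            # The first ring without a full pass is the only one allowed to run,
            # so a failed sandbox blocks pilot and production.
            return ring
    return None
```

Calling `next_ring([])` yields `"sandbox"`, and a sandbox result with a failed policy check keeps the gate at `"sandbox"` rather than advancing to pilot.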

Decision Workflow Where DevOps Unlocks Progress

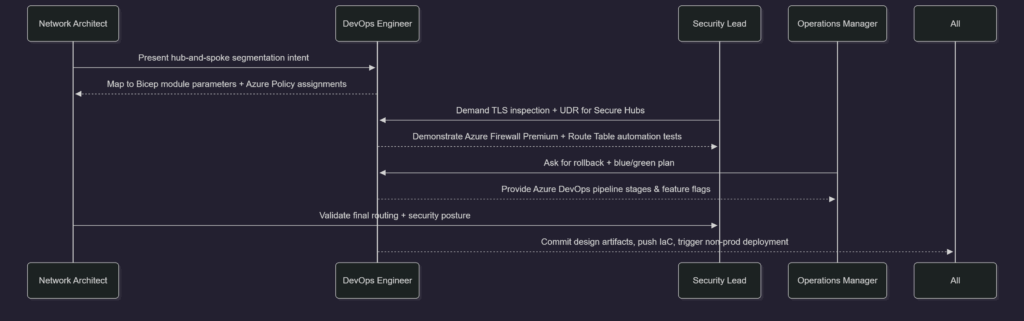

The flow in the diagram, inspired by real engagements, shows how a DevOps practitioner guides decision checkpoints during a multi-day Azure VWAN design workshop.

Notice how every stakeholder returns to the DevOps engineer to confirm feasibility, automated safeguards, and telemetry coverage. That constant feedback loop is what makes them a decision pillar rather than a downstream executor.

Real-World Example: Northwind Mobility’s Global Fabric

Consider Northwind Mobility, a multinational automotive supplier migrating from a legacy MPLS backbone to Azure Virtual WAN across six continents. Their requirements included deterministic latency for manufacturing execution systems (MES), regional data residency for telematics analytics, and zero-trust segmentation between shop-floor IoT and enterprise workloads. The project charter mandated Microsoft Azure as the only cloud platform.

During the design phase, the DevOps engineer spearheaded several pivotal contributions:

- Produced an Azure Landing Zone accelerator that stitched VWAN hubs, Azure Firewall Manager policies, Private DNS zones, and Azure DDoS Protection into reusable modules.

- Modeled overlapping RFC1918 ranges from 90+ plants, then created automated pre-flight checks that reject deployments with conflicting address spaces.

- Defined Azure Monitor workspaces and Log Analytics queries that proved compliance with ISO 27001 controls before the security team signed off.

- Linked Azure Automation runbooks and Azure Arc-enabled routers to orchestrate failover drills, demonstrating that ExpressRoute and VPN coexistence behaved exactly as promised in the design sessions.

When a board-level milestone required accelerating the rollout in APAC, leadership deferred to the DevOps owner because they held the only end-to-end view of the IaC, deployment rings, and policy gates. That is the essence of a “vital pillar” decision maker: they understand both the architectural narrative and the mechanized path to production.

Governance, Automation, and Observability Pillars

Embedding decisions into code is not optional—it is the guardrail that keeps an Azure VWAN fabric from drifting. Successful DevOps leaders focus on three pillars:

- Governance: Azure Policy, Management Groups, and Defender for Cloud plans enforced through pull-request gates. Every VWAN hub deployment references approved route tables, IP schemas, and DDoS plans.

- Automation: Azure DevOps or GitHub Actions pipelines implementing linting and static checks (e.g., tflint, arm-ttk), integration tests (Azure CLI/PowerShell), and deployment rings (sandbox → pilot → production) with manual checks only where regulators demand them.

- Observability: Azure Monitor workbooks, Application Insights dependency maps, Network Watcher Connection Monitor, and Sentinel analytics rules capturing signals across the VWAN mesh.

DevOps engineers harden these pillars by codifying Service Level Objectives (SLOs) for key flows, such as hub-to-hub latency or ExpressRoute egress, and hooking them into Azure Monitor Alerts that feed Microsoft Teams or PagerDuty. Decisions made during design sessions remain alive through telemetry.
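As a rough illustration of what "codifying an SLO" can mean, the sketch below checks a latency objective against a list of samples using a nearest-rank percentile. The function names, the 95th-percentile default, and the sample values are assumptions made for the example; in practice the samples would come from your monitoring queries and the thresholds from the design session.

```python
from typing import Sequence


def percentile(samples: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 1 <= pct <= 100:
        raise ValueError("pct must be between 1 and 100")
    ordered = sorted(samples)
    # Integer ceiling of pct * n / 100 avoids floating-point rounding surprises.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[rank - 1]


def slo_met(samples_ms: Sequence[float], threshold_ms: float, pct: int = 95) -> bool:
    """True when the chosen percentile of the samples is within the threshold."""
    return percentile(samples_ms, pct) <= threshold_ms


if __name__ == "__main__":
    # Hypothetical hub-to-hub latency samples in milliseconds.
    hub_to_hub_ms = [10, 12, 11, 13, 50, 12, 11, 10, 12, 11]
    print("p95 within 40 ms:", slo_met(hub_to_hub_ms, threshold_ms=40))
```

A single slow sample is enough to breach a p95 objective on a small window, which is one reason to agree on window size and percentile in the design session rather than after the first alert.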

Actionable Checklist for Decision Sessions

The following checklist equips DevOps engineers to drive clarity and accelerate approvals during Azure-only networking workshops:

- Map every architectural block to a tracked IaC artifact (Terraform module, Bicep file, or Azure CLI script).

- Preload Azure Policy initiatives for segmentation, resource tagging, and DDoS requirements so decisions automatically generate compliance posture.

- Create a reference diagnostics matrix linking each component (VWAN hubs, Azure Firewall, Route Tables, Virtual Network Gateways) to log categories and retention periods in Azure Monitor.

- Document failure domains and prove them with Azure Chaos Studio experiments targeting VPN Gateway instances, route tables, and Azure Firewall availability zones.

- Publish a RACI that makes the DevOps engineer accountable for “design to deployment fidelity,” ensuring every decision is represented in the backlog.
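The diagnostics matrix from the checklist can be kept as data and checked automatically. The following Python sketch assumes a simple dictionary layout; the component keys, the `coverage_gaps` helper, and the 30-day minimum are hypothetical, and the log category names are examples that should be confirmed against what each resource actually exposes.

```python
from typing import Dict, List

# Assumed layout: component name -> log categories and retention in days.
DIAGNOSTICS_MATRIX: Dict[str, dict] = {
    "azure_firewall": {
        "categories": ["AzureFirewallApplicationRule", "AzureFirewallNetworkRule"],
        "retention_days": 90,
    },
    "vnet_gateway": {
        "categories": ["GatewayDiagnosticLog", "TunnelDiagnosticLog"],
        "retention_days": 30,
    },
    "vwan_hub": {"categories": [], "retention_days": 30},
}


def coverage_gaps(components: List[str], matrix: Dict[str, dict],
                  min_retention_days: int = 30) -> Dict[str, str]:
    """Return a reason for every component that lacks usable diagnostics."""
    gaps: Dict[str, str] = {}
    for name in components:
        entry = matrix.get(name)
        if entry is None:
            gaps[name] = "no entry in matrix"
        elif not entry.get("categories"):
            gaps[name] = "no log categories listed"
        elif entry.get("retention_days", 0) < min_retention_days:
            gaps[name] = "retention below minimum"
    return gaps


if __name__ == "__main__":
    in_scope = ["vwan_hub", "azure_firewall", "route_table", "vnet_gateway"]
    for component, reason in coverage_gaps(in_scope, DIAGNOSTICS_MATRIX).items():
        print(f"[GAP] {component}: {reason}")
```

Running a check like this in the pull-request pipeline turns the matrix from a document into a gate: a new component cannot be approved until someone decides what it logs and for how long.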

Reference IaC for Common VWAN Enterprise Fabric Use Cases

Terraform reference implementation (common use cases)

Suggested repo layout (for your readers)

    infra/
      envs/
        sandbox.tfvars
        pilot.tfvars
        prod.tfvars
      scripts/
        check_cidrs.py
      versions.tf
      variables.tf
      main.tf
      policy-rbac.tf
      outputs.tf
      ip-plan.json          # optional but recommended for preflight validation
    .github/                # GitHub only discovers workflows at the repository root
      workflows/
        terraform-vwan-fabric.yml

1) versions.tf (providers + baseline)

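    terraform {
      required_version = ">= 1.5.0"

      required_providers {
        azurerm = {
          source = "hashicorp/azurerm"
          # The enabled_log block used in main.tf arrived during the 3.x series
          # (3.39 to our knowledge), so pin at or above that release.
          version = "~> 3.39"
        }
      }
    }

    provider "azurerm" {
      features {}
    }

    data "azurerm_client_config" "current" {}

2) variables.tf (turn design decisions into parameters)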

    variable "name_prefix" {
      type        = string
      description = "Naming prefix for all resources (e.g., northwind, contoso, nw-sbx)."
    }

    variable "networking_rg_name" {
      type        = string
      description = "Resource group name hosting the enterprise fabric resources."
    }

    variable "networking_rg_location" {
      type        = string
      description = "Location of the resource group (RG location can differ from hub locations)."
    }

    variable "vwan_location" {
      type        = string
      description = "Azure region used as the Virtual WAN resource location (management plane)."
    }

    variable "hubs" {
      description = <<-EOT
        Map of hubs keyed by short name (e.g., weu, eus2).
        - address_prefix is the Virtual Hub address prefix (NOT a VNet CIDR).
        - secured=true deploys hub-integrated Azure Firewall Premium.
      EOT

      type = map(object({
        location                 = string
        address_prefix           = string
        secured                  = bool
        firewall_public_ip_count = optional(number, 1)
      }))
    }

    variable "spokes" {
      description = <<-EOT
        Map of spoke VNets keyed by workload name.
        Each spoke connects to a hub via vHub connection.
      EOT

      type = map(object({
        location      = string
        address_space = list(string)
        hub_key       = string
        ddos_enabled  = optional(bool, true)
      }))
    }

    variable "private_dns_zones" {
      type        = set(string)
      default     = []
      description = "Private DNS zones to create and link to all spokes (e.g., privatelink.vaultcore.azure.net)."
    }

    variable "law_retention_days" {
      type        = number
      default     = 30
      description = "Log Analytics retention in days."
    }

    variable "deployment_principal_object_id" {
      type        = string
      default     = ""
      description = "Optional: Object ID to grant Contributor on the RG (used by pipeline identity)."
    }

3) main.tf (vWAN + hubs + secured hub + spokes + DNS + diagnostics)

    resource "azurerm_resource_group" "net" {
      name     = var.networking_rg_name
      location = var.networking_rg_location
    }

    resource "azurerm_log_analytics_workspace" "law" {
      name                = "${var.name_prefix}-law"
      location            = azurerm_resource_group.net.location
      resource_group_name = azurerm_resource_group.net.name
      sku                 = "PerGB2018"
      retention_in_days   = var.law_retention_days
    }

    resource "azurerm_virtual_wan" "vwan" {
      name                = "${var.name_prefix}-vwan"
      location            = var.vwan_location
      resource_group_name = azurerm_resource_group.net.name
      type                = "Standard"

      allow_branch_to_branch_traffic = true
      # Confirm that your azurerm version still accepts the next argument;
      # drop it if `terraform validate` rejects it.
      allow_vnet_to_vnet_traffic = true
    }

    resource "azurerm_virtual_hub" "hub" {
      for_each = var.hubs

      name                = "${var.name_prefix}-hub-${each.key}"
      location            = each.value.location
      resource_group_name = azurerm_resource_group.net.name
      virtual_wan_id      = azurerm_virtual_wan.vwan.id
      address_prefix      = each.value.address_prefix
      sku                 = "Standard"
    }

    # Shared Firewall Policy (Premium) for all secured hubs
    resource "azurerm_firewall_policy" "fwpol" {
      name                     = "${var.name_prefix}-fwpol-premium"
      location                 = azurerm_resource_group.net.location
      resource_group_name      = azurerm_resource_group.net.name
      sku                      = "Premium"
      threat_intelligence_mode = "Alert"

      dns {
        proxy_enabled = true
      }
    }

    # Example baseline rule group (keep it simple for the article)
    resource "azurerm_firewall_policy_rule_collection_group" "baseline" {
      name               = "rcg-baseline"
      firewall_policy_id = azurerm_firewall_policy.fwpol.id
      priority           = 100

      application_rule_collection {
        name     = "app-allow-microsoft-fqdn-tags"
        priority = 100
        action   = "Allow"

        rule {
          # "AzureCloud" is a service tag, not an FQDN tag, so it cannot be used in
          # destination_fqdn_tags. "WindowsUpdate" is shown as an example FQDN tag.
          name                  = "allow-windows-update"
          source_addresses      = ["10.0.0.0/8"] # Replace with your enterprise RFC1918 strategy
          destination_fqdn_tags = ["WindowsUpdate"]

          protocols {
            type = "Https"
            port = 443
          }
        }
      }
    }

    # Hub-integrated Azure Firewall Premium (secured hub pattern)
    resource "azurerm_firewall" "hub_fw" {
      for_each = { for k, v in var.hubs : k => v if v.secured }

      name                = "${var.name_prefix}-afw-${each.key}"
      location            = azurerm_virtual_hub.hub[each.key].location
      resource_group_name = azurerm_resource_group.net.name
      sku_name            = "AZFW_Hub"
      sku_tier            = "Premium"
      firewall_policy_id  = azurerm_firewall_policy.fwpol.id

      virtual_hub {
        virtual_hub_id  = azurerm_virtual_hub.hub[each.key].id
        public_ip_count = each.value.firewall_public_ip_count
      }
    }

    # Route table that forces default traffic to the Azure Firewall (per secured hub)
    resource "azurerm_virtual_hub_route_table" "spokes_to_fw" {
      for_each = azurerm_firewall.hub_fw

      name           = "rt-spokes-default-to-fw"
      virtual_hub_id = azurerm_virtual_hub.hub[each.key].id
      labels         = ["spokes"]

      route {
        name              = "default-to-firewall"
        destinations_type = "CIDR"
        destinations      = ["0.0.0.0/0"]
        next_hop_type     = "ResourceId"
        next_hop          = azurerm_firewall.hub_fw[each.key].id
      }
    }

    # DDoS plan (attached to spoke VNets; toggled per spoke via ddos_enabled)
    resource "azurerm_network_ddos_protection_plan" "ddos" {
      name                = "${var.name_prefix}-ddos"
      location            = azurerm_resource_group.net.location
      resource_group_name = azurerm_resource_group.net.name
    }

    resource "azurerm_virtual_network" "spoke" {
      for_each = var.spokes

      name                = "${var.name_prefix}-vnet-${each.key}"
      location            = each.value.location
      resource_group_name = azurerm_resource_group.net.name
      address_space       = each.value.address_space

      ddos_protection_plan {
        id     = azurerm_network_ddos_protection_plan.ddos.id
        enable = each.value.ddos_enabled
      }
    }

    # Minimal subnet (expand as needed in real deployments)
    resource "azurerm_subnet" "spoke_app" {
      for_each = var.spokes

      name                 = "snet-app"
      resource_group_name  = azurerm_resource_group.net.name
      virtual_network_name = azurerm_virtual_network.spoke[each.key].name

      # Adds 8 bits to the first address space, e.g. 10.20.0.0/16 -> 10.20.1.0/24.
      # The first address space must therefore be /24 or larger.
      address_prefixes = [cidrsubnet(each.value.address_space[0], 8, 1)]
    }

    # Connect spokes to hubs
    locals {
      # For each hub, pick the route table spokes should associate to:
      # - secured hub: our custom "default-to-fw" route table
      # - non-secured hub: hub default route table
      spoke_associated_route_table = {
        for hub_key, hub in var.hubs :
        hub_key => (
          hub.secured
          ? azurerm_virtual_hub_route_table.spokes_to_fw[hub_key].id
          : azurerm_virtual_hub.hub[hub_key].default_route_table_id
        )
      }
    }

    resource "azurerm_virtual_hub_connection" "spoke_conn" {
      for_each = var.spokes

      name                      = "${var.name_prefix}-conn-${each.key}"
      virtual_hub_id            = azurerm_virtual_hub.hub[each.value.hub_key].id
      remote_virtual_network_id = azurerm_virtual_network.spoke[each.key].id

      # This tells Azure to use the secured hub for Internet-bound flows (when Firewall is present)
      internet_security_enabled = var.hubs[each.value.hub_key].secured

      routing {
        associated_route_table_id = local.spoke_associated_route_table[each.value.hub_key]

        propagated_route_table {
          labels = ["default"]
          route_table_ids = [
            azurerm_virtual_hub.hub[each.value.hub_key].default_route_table_id
          ]
        }
      }
    }

    # Private DNS Zones + links to all spokes
    resource "azurerm_private_dns_zone" "zones" {
      for_each = var.private_dns_zones

      name                = each.value
      resource_group_name = azurerm_resource_group.net.name
    }

    resource "azurerm_private_dns_zone_virtual_network_link" "spoke_links" {
      # One link per (zone, spoke) pair, keyed as "<zone>::<spoke>"
      for_each = {
        for pair in setproduct(tolist(var.private_dns_zones), keys(var.spokes)) :
        "${pair[0]}::${pair[1]}" => { zone = pair[0], spoke = pair[1] }
      }

      name                  = "${var.name_prefix}-pdns-${replace(each.value.zone, ".", "-")}-${each.value.spoke}"
      resource_group_name   = azurerm_resource_group.net.name
      private_dns_zone_name = azurerm_private_dns_zone.zones[each.value.zone].name
      virtual_network_id    = azurerm_virtual_network.spoke[each.value.spoke].id
      registration_enabled  = false
    }

    # Azure Firewall diagnostics -> Log Analytics (discovers categories dynamically)
    data "azurerm_monitor_diagnostic_categories" "fw" {
      for_each = azurerm_firewall.hub_fw

      resource_id = each.value.id
    }

    resource "azurerm_monitor_diagnostic_setting" "fw_to_law" {
      for_each = azurerm_firewall.hub_fw

      name                       = "diag-to-law"
      target_resource_id         = each.value.id
      log_analytics_workspace_id = azurerm_log_analytics_workspace.law.id

      # enabled_log takes only a category; listing a category is what enables it.
      dynamic "enabled_log" {
        for_each = data.azurerm_monitor_diagnostic_categories.fw[each.key].log_category_types
        content {
          category = enabled_log.value
        }
      }

      # The data source exposes metric categories through its `metrics` attribute.
      dynamic "metric" {
        for_each = data.azurerm_monitor_diagnostic_categories.fw[each.key].metrics
        content {
          category = metric.value
          enabled  = true
        }
      }
    }

4) policy-rbac.tf (governance gates that match your article narrative)

A) Example: “Require tags” policy (design-session friendly)

This is the kind of thing a DevOps engineer brings into the room to prevent “pretty diagrams, messy reality.”

    resource "azurerm_policy_definition" "require_tags" {
      name         = "${var.name_prefix}-require-tags"
      policy_type  = "Custom"
      mode         = "Indexed"
      display_name = "Require mandatory tags on all resources"

      metadata = jsonencode({
        category = "Tags"
      })

      parameters = jsonencode({
        tagNames = {
          type         = "Array"
          metadata     = { displayName = "Tag names" }
          defaultValue = ["Owner", "CostCenter"]
        }
      })

      # Denies any resource missing either of the first two tag names in the array
      policy_rule = jsonencode({
        if = {
          anyOf = [
            { field = "[concat('tags[', parameters('tagNames')[0], ']')]", exists = "false" },
            { field = "[concat('tags[', parameters('tagNames')[1], ']')]", exists = "false" }
          ]
        }
        then = { effect = "deny" }
      })
    }

    # azurerm 3.x has no generic azurerm_policy_assignment resource;
    # subscription-scoped assignments use azurerm_subscription_policy_assignment.
    resource "azurerm_subscription_policy_assignment" "require_tags" {
      name                 = "${var.name_prefix}-require-tags"
      display_name         = "Require mandatory tags"
      subscription_id      = "/subscriptions/${data.azurerm_client_config.current.subscription_id}"
      policy_definition_id = azurerm_policy_definition.require_tags.id
    }

B) Optional: give your pipeline identity Contributor on the fabric RG

    resource "azurerm_role_assignment" "pipeline_rg_contributor" {
      # Created only when an object ID is supplied
      count = var.deployment_principal_object_id != "" ? 1 : 0

      scope                = azurerm_resource_group.net.id
      role_definition_name = "Contributor"
      principal_id         = var.deployment_principal_object_id
    }

5) outputs.tf (useful for ops + documentation)

    output "virtual_wan_id" {
      value = azurerm_virtual_wan.vwan.id
    }

    output "hub_ids" {
      value = { for k, v in azurerm_virtual_hub.hub : k => v.id }
    }

    output "firewall_ids" {
      value = { for k, v in azurerm_firewall.hub_fw : k => v.id }
    }

    output "spoke_vnet_ids" {
      value = { for k, v in azurerm_virtual_network.spoke : k => v.id }
    }

Example deployment rings (tfvars)

envs/sandbox.tfvars

    name_prefix            = "nw-sbx"
    networking_rg_name     = "rg-nw-vwan-sbx"
    networking_rg_location = "westeurope"
    vwan_location          = "westeurope"

    hubs = {
      weu = {
        location                 = "westeurope"
        address_prefix           = "10.10.0.0/23"
        secured                  = true
        firewall_public_ip_count = 1
      }
    }

    spokes = {
      app1 = {
        location      = "westeurope"
        address_space = ["10.20.0.0/16"]
        hub_key       = "weu"
        ddos_enabled  = true
      }
    }

    private_dns_zones = [
      "privatelink.vaultcore.azure.net",
      "privatelink.blob.core.windows.net"
    ]

    law_retention_days = 30

    # deployment_principal_object_id = "00000000-0000-0000-0000-000000000000"

Your pilot/prod tfvars are usually the same shape with different prefixes, CIDRs, and possibly more hubs/spokes.
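Because each ring carries its own CIDRs, the `ip-plan.json` file consumed by the preflight check can drift from the tfvars. One way to reduce that risk, sketched here under assumptions, is to derive the plan from the same hub and spoke definitions. The `build_ip_plan` helper and the `hub-<key>` / `spoke-<name>-<hub_key>` naming are hypothetical, and the inputs are plain dictionaries shaped like the `hubs` and `spokes` variables rather than parsed tfvars.

```python
import json
from typing import Dict


def build_ip_plan(hubs: Dict[str, dict], spokes: Dict[str, dict]) -> dict:
    """Build an ip-plan structure ({"networks": [...]}) from hub and spoke maps."""
    networks = []
    for key, hub in sorted(hubs.items()):
        networks.append({"name": f"hub-{key}", "cidr": hub["address_prefix"]})
    for key, spoke in sorted(spokes.items()):
        spaces = spoke["address_space"]
        for index, cidr in enumerate(spaces):
            # Add a numeric suffix only when a spoke has several address spaces.
            suffix = "" if len(spaces) == 1 else f"-{index}"
            name = f"spoke-{key}-{spoke['hub_key']}{suffix}"
            networks.append({"name": name, "cidr": cidr})
    return {"networks": networks}


if __name__ == "__main__":
    # Values mirror the sandbox ring shown above.
    hubs = {"weu": {"address_prefix": "10.10.0.0/23"}}
    spokes = {"app1": {"address_space": ["10.20.0.0/16"], "hub_key": "weu"}}
    print(json.dumps(build_ip_plan(hubs, spokes), indent=2))
```

Generating the plan per ring, and then running the overlap check across all rings together, also catches conflicts between environments that are meant to be routable to each other.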

CI/CD: GitHub Actions pipeline (Terraform + ring flow + quality gates)

.github/workflows/terraform-vwan-fabric.yml

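    name: terraform-vwan-enterprise-fabric

    on:
      pull_request:
        paths:
          - "infra/**"
      push:
        branches: ["main"]
        paths:
          - "infra/**"

    permissions:
      id-token: write
      contents: read

    env:
      TF_IN_AUTOMATION: "true"
      TF_INPUT: "false"
      ARM_USE_OIDC: "true"
      # The azurerm provider reads these when authenticating with OIDC
      ARM_CLIENT_ID: ${{ secrets.AZURE_CLIENT_ID }}
      ARM_TENANT_ID: ${{ secrets.AZURE_TENANT_ID }}
      ARM_SUBSCRIPTION_ID: ${{ secrets.AZURE_SUBSCRIPTION_ID }}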

    jobs:
      validate-and-plan:
        runs-on: ubuntu-latest
        defaults:
          run:
            working-directory: infra
        strategy:
          matrix:
            ring: [sandbox, pilot]
        steps:
          - uses: actions/checkout@v4

          - name: Preflight - CIDR overlap check
            run: |
              python3 scripts/check_cidrs.py ip-plan.json

          - uses: hashicorp/setup-terraform@v3

          - name: Azure login (OIDC)
            uses: azure/login@v2
            with:
              client-id: ${{ secrets.AZURE_CLIENT_ID }}
              tenant-id: ${{ secrets.AZURE_TENANT_ID }}
              subscription-id: ${{ secrets.AZURE_SUBSCRIPTION_ID }}

          - name: Terraform fmt
            run: terraform fmt -check -recursive

          - name: Terraform init
            run: terraform init

          - name: Terraform validate
            run: terraform validate

          - name: Terraform plan (${{ matrix.ring }})
            run: terraform plan -var-file="envs/${{ matrix.ring }}.tfvars" -out="tfplan-${{ matrix.ring }}.bin"

      apply-production:
        # Production apply runs only on main, and should be protected by GitHub "Environments" approvals.
        if: github.ref == 'refs/heads/main'
        needs: [validate-and-plan]
        runs-on: ubuntu-latest
        environment: production
        defaults:
          run:
            working-directory: infra
        steps:
          - uses: actions/checkout@v4

          - uses: hashicorp/setup-terraform@v3

          - name: Azure login (OIDC)
            uses: azure/login@v2
            with:
              client-id: ${{ secrets.AZURE_CLIENT_ID }}
              tenant-id: ${{ secrets.AZURE_TENANT_ID }}
              subscription-id: ${{ secrets.AZURE_SUBSCRIPTION_ID }}

          - name: Terraform init
            run: terraform init

          - name: Terraform apply (prod)
            run: terraform apply -auto-approve -var-file="envs/prod.tfvars"

Preflight IP overlap checks (the “stop bad decisions early” tool)

ip-plan.json (example)

    {
      "networks": [
        { "name": "hub-weu", "cidr": "10.10.0.0/23" },
        { "name": "spoke-app1-weu", "cidr": "10.20.0.0/16" }
      ]
    }

scripts/check_cidrs.py

    #!/usr/bin/env python3
    """Preflight check: exit non-zero when any two CIDRs in the IP plan overlap."""
    import ipaddress
    import json
    import sys
    from itertools import combinations


    def main(path: str) -> int:
        with open(path, "r", encoding="utf-8") as f:
            data = json.load(f)

        nets = data.get("networks", [])
        parsed = []
        for item in nets:
            name = item["name"]
            cidr = item["cidr"]
            try:
                # strict=True rejects CIDRs with host bits set (e.g. 10.0.0.1/24)
                net = ipaddress.ip_network(cidr, strict=True)
            except ValueError as e:
                print(f"[FAIL] Invalid CIDR for {name}: {cidr} ({e})")
                return 2
            parsed.append((name, net))

        # Compare every pair of networks exactly once
        overlaps = []
        for (n1, a), (n2, b) in combinations(parsed, 2):
            if a.overlaps(b):
                overlaps.append((n1, str(a), n2, str(b)))

        if overlaps:
            print("[FAIL] CIDR overlap detected:")
            for n1, a, n2, b in overlaps:
                print(f" - {n1} {a} overlaps {n2} {b}")
            return 1

        print("[OK] No CIDR overlaps detected.")
        return 0


    if __name__ == "__main__":
        if len(sys.argv) != 2:
            print("Usage: check_cidrs.py <ip-plan.json>")
            sys.exit(2)
        sys.exit(main(sys.argv[1]))

Optional “common use case” add-ons you can mention (without drowning the article)

These are the next practical extensions readers usually ask for:

A) Hybrid connectivity (ExpressRoute / VPN) — pattern stub

In a real enterprise, circuit IDs, sites, links, and BGP are org-specific, so it’s common to show this as a pattern in the article:

    # Example only: ExpressRoute Gateway in a vHub (then you attach ExpressRoute connections)
    resource "azurerm_express_route_gateway" "ergw" {
      for_each = { for k, v in var.hubs : k => v } # enable/disable via additional flags if desired

      name                = "${var.name_prefix}-ergw-${each.key}"
      location            = azurerm_virtual_hub.hub[each.key].location
      resource_group_name = azurerm_resource_group.net.name
      virtual_hub_id      = azurerm_virtual_hub.hub[each.key].id
      scale_units         = 1
    }

    # ExpressRoute connection depends on your ExpressRoute circuit / peering IDs
    # resource "azurerm_express_route_connection" "..." { ... }

B) Observability extras

- Add Network Watcher + Connection Monitor for critical hub-to-hub or spoke-to-onprem flows.

- Add Microsoft Sentinel on the same Log Analytics workspace for detections tied to firewall logs.